
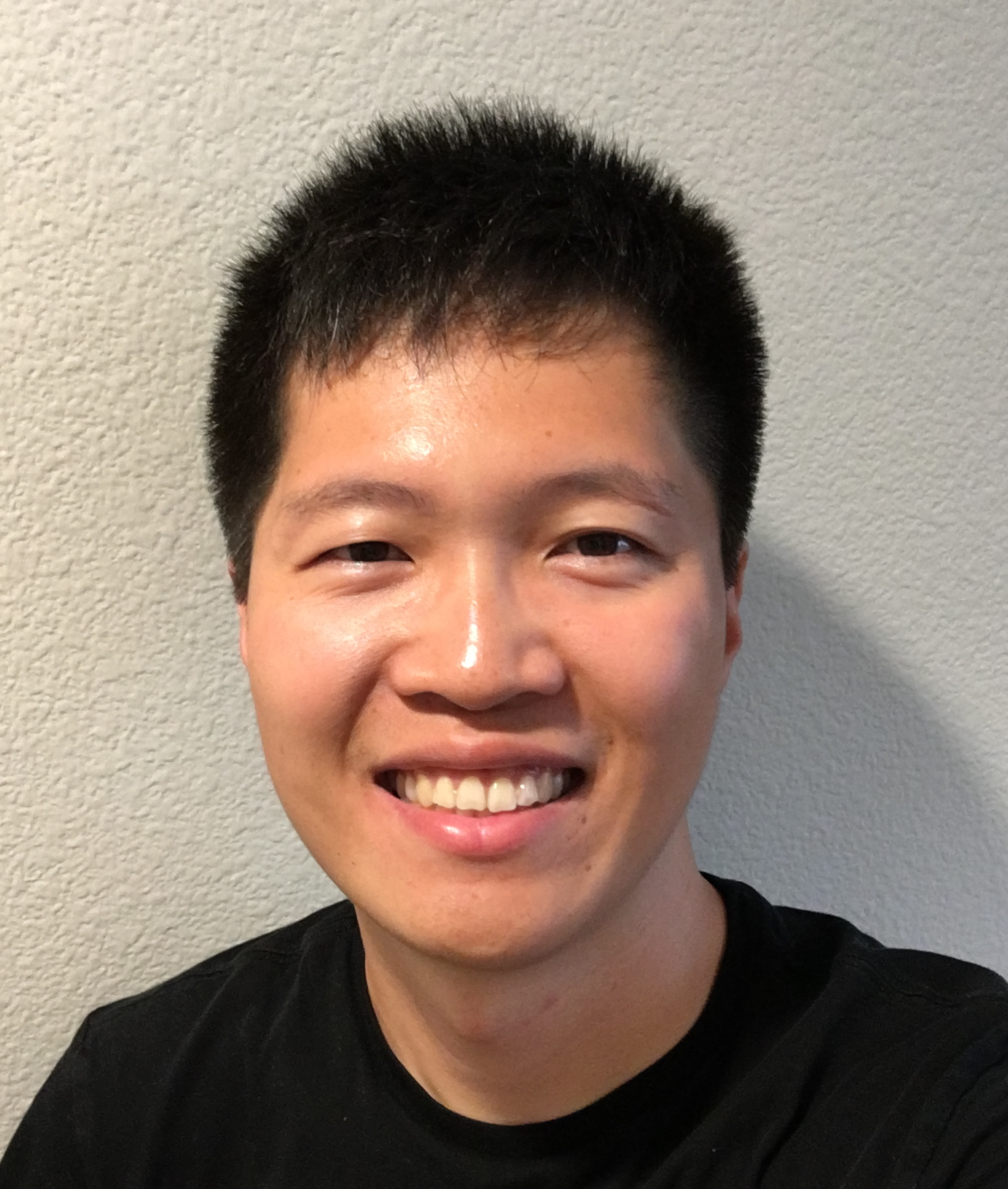

Yu-Hsiang Lin

Email: hitr2997925@gmail.com

|

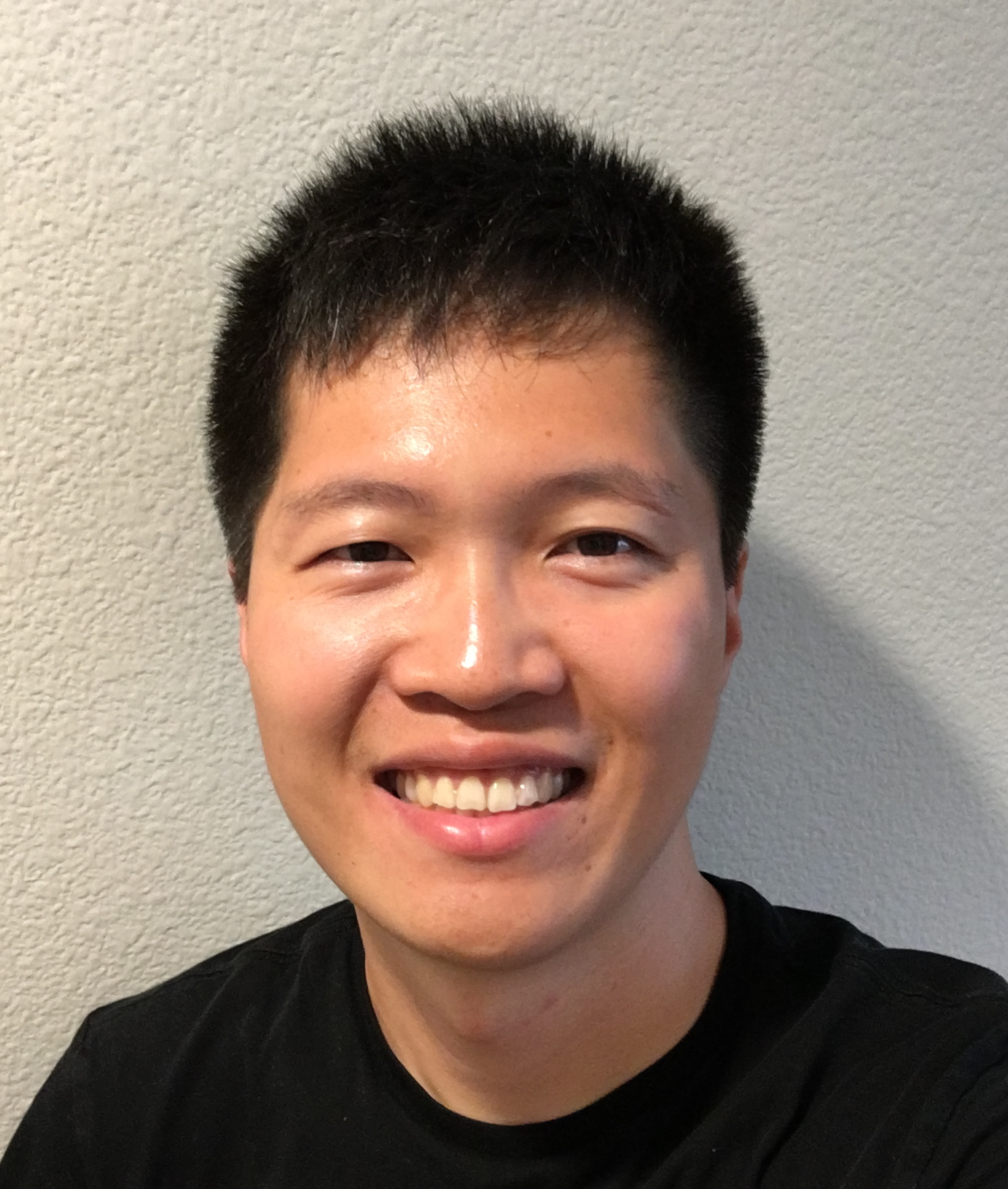

Yu-Hsiang Lin

Email: hitr2997925@gmail.com |

I am a software engineer at Meta. I enjoy working on scientific and engineering projects in machine learning, recently focusing on natural language processing and large language models (LLMs), and previously on product search.

At Amazon AGI and Alexa, I built LLM capabilities including instruction following, creative writing, retrieval-augmented generation, and prompt orchestration. I worked on many aspects of model building, such as supervised fine-tuning, alignment tuning (RLHF, PPO, reward modeling, DPO), curriculum learning, human preference evaluation, LLM-as-a-judge evaluation, model-in-the-loop self-instruct data generation, self-critique-and-revise data improvement, and prompt engineering. Please see my projects for more details.

At Amazon Search, I built neural generative language models to help customers find relevant new products, introduced graph neural networks and new model training methods to address low-resource learning in product search, and developed online ranking features to improve search performance on long-tail queries. I also built the data pipeline that generates the online A/B testing metrics used by teams across Amazon, as well as a feature-build pipeline that publishes new product features to the search index. During my internship, I developed a two-phase ranking model for Amazon Business.

I received my master's degree from the Language Technologies Institute at Carnegie Mellon University and my Ph.D. in physics from National Taiwan University. My master's training focused on machine learning, deep learning, natural language processing, and distributed systems. My Ph.D. research was in high-energy physics and astrophysics.

At Carnegie Mellon University, I worked on natural language processing topics, including cross-lingual transfer for low-resource languages, advised by Graham Neubig (ACL 2019). I also worked on reinforcement learning, generative adversarial networks, and structured prediction. At National Taiwan University, I worked with Chih-Jen Lin on second-order optimization methods for deep neural networks (Neural Computation 2018).